The Challenge

Wanelo came to us for help getting ready to handle a huge increase in traffic.

They had already seen traffic increase by a factor of about 5x over the previous year, and they knew that their existing systems wouldn’t be able to handle another increase of even 2x, let alone 5x.

Together we scaled their infrastructure to handle a 7x increase in traffic over their previous peak capacity, and we architected and implemented a database system with plenty of additional room to grow if needed. To do this, we designed, built, and deployed a massively parallel system that processed more data every day than they had previously processed in the course of weeks. We also trained junior and mid-level developers to make them more effective and productive team members.

Over the course of almost two years, we saw Wanelo grow their engineering team from about 10 to about 20 and increase the traffic they could handle from about 600 requests/second to about 4200 requests/second.

The Solution

Planning to handle dramatic scaling

From the start, Wanelo was sure they needed help preparing for a huge increase in traffic. Traffic had already grown by about 5x over the previous year, and their existing systems could not handle another increase of even 2x, let alone 5x.

We spent a few months discussing the current architecture, examining the strategies used by companies like Twitter to handle dramatic scaling, and planning out a new system that we thought would be able to handle the new traffic.

The biggest problem we could foresee was that user data was growing so fast that it would soon overwhelm the largest database server we could buy at any price. Once the planning phase was over, we spent a few months on implementation.

We built a custom web service with a JSON API to replace their existing database of product lists saved by users. We designed a scheme for sharding the data, wrote a web service with an HTTP API that exposed the data across all shards, and then wrote a client library that acted as a drop-in replacement for ActiveRecord for the newly sharded data. The data set was sharded across hundreds of logical nodes hosted on dozens of physical nodes, with plenty of room to grow in the future.

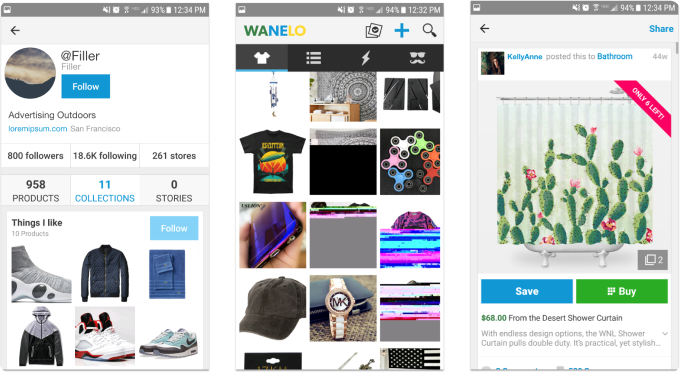
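To make the logical-versus-physical split concrete, here is a minimal sketch in Ruby of how a key might be routed to a shard. It is an illustration under stated assumptions, not the code that ran at Wanelo: the shard count, the host names, the CRC32 hash, and the helper names `logical_shard_for` and `node_for` are all hypothetical.

```ruby
require "zlib"

# Hypothetical sizes: the text only says "hundreds" of logical nodes
# on "dozens" of physical nodes.
LOGICAL_SHARDS = 256
PHYSICAL_NODES = (1..16).map { |i| format("db%02d.example.internal", i) }

# Explicit table from logical shard to physical host. Keeping this as data
# (rather than hashing straight to a host) means a logical shard can be moved
# to a new machine by changing one entry, without rehashing any keys.
SHARD_MAP = (0...LOGICAL_SHARDS).to_h do |shard|
  [shard, PHYSICAL_NODES[shard % PHYSICAL_NODES.size]]
end

# A stable hash, so the same user always lands on the same logical shard.
def logical_shard_for(user_id)
  Zlib.crc32(user_id.to_s) % LOGICAL_SHARDS
end

# Resolve a user to the physical host that currently holds their shard.
def node_for(user_id, shard_map = SHARD_MAP)
  shard_map.fetch(logical_shard_for(user_id))
end
```

Because there are many more logical shards than machines, adding capacity in a scheme like this means reassigning some logical shards to new hosts rather than re-splitting the data.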

Fast display of diverse product images

Wanelo needed images in many sizes, but Ruby was too slow to resize them on the fly. We had been working around this by resizing every image in Ruby ahead of time to every size we might use, even sizes we never actually used, which was costly in both time and storage fees.

During a freeform hack day, one of the devs built a proof-of-concept resize-on-demand image service in Go. We spent a month or two helping fix bugs in that service, writing Chef code to automate its deployment, and reworking the existing systems to use the new service.

Accurate catalog information on over 30 million products from 550,000 stores

Wanelo wanted up-to-date information about whether the products in their database were in stock at the online stores that sold them. We worked with them on a system that could download and update information about hundreds of thousands of products from thousands of stores on a nightly basis. We built a system in Ruby to handle fetching, processing, and updating entire store catalogs in parallel, and launched it successfully.
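One common way to process many store catalogs in parallel in Ruby is a fixed pool of worker threads pulling store IDs from a shared queue. The sketch below shows that general pattern only; the method name `process_catalogs`, the worker count, and the result format are assumptions for illustration, and the real system's design is not described in this article.

```ruby
require "thread"

# Run the given block once per store ID using a fixed pool of worker threads.
# Returns an array of [store_id, status, value_or_error_message] triples.
# Store IDs must be non-nil, because nil signals "queue drained" below.
def process_catalogs(store_ids, workers: 8, &fetch_and_update)
  queue = Queue.new
  store_ids.each { |id| queue << id }
  queue.close # once closed and empty, pop returns nil and workers exit

  results = Queue.new
  threads = Array.new(workers) do
    Thread.new do
      while (store_id = queue.pop)
        begin
          results << [store_id, :ok, fetch_and_update.call(store_id)]
        rescue StandardError => e
          # One bad catalog should not stop the rest of the nightly run.
          results << [store_id, :failed, e.message]
        end
      end
    end
  end

  threads.each(&:join)
  Array.new(results.size) { results.pop }
end
```

A caller would pass a block that downloads and updates a single store's catalog, for example `process_catalogs(ids, workers: 32) { |id| ... }`. Since this kind of work is dominated by network waits, threads can overlap usefully even under Ruby's global interpreter lock.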